In May 2018, QUU and Parasyn commenced their journey of liberating QUU’s disparate SCADA and other process information system data into one centralised industrial data lake.

Industrial Data Lake at QUU

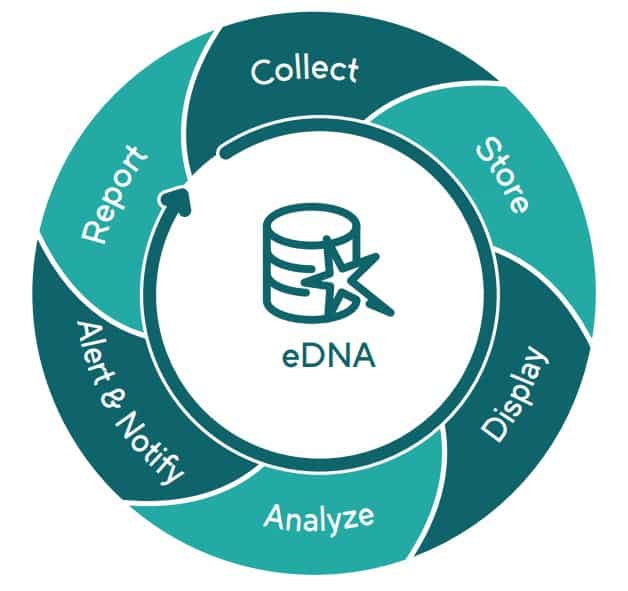

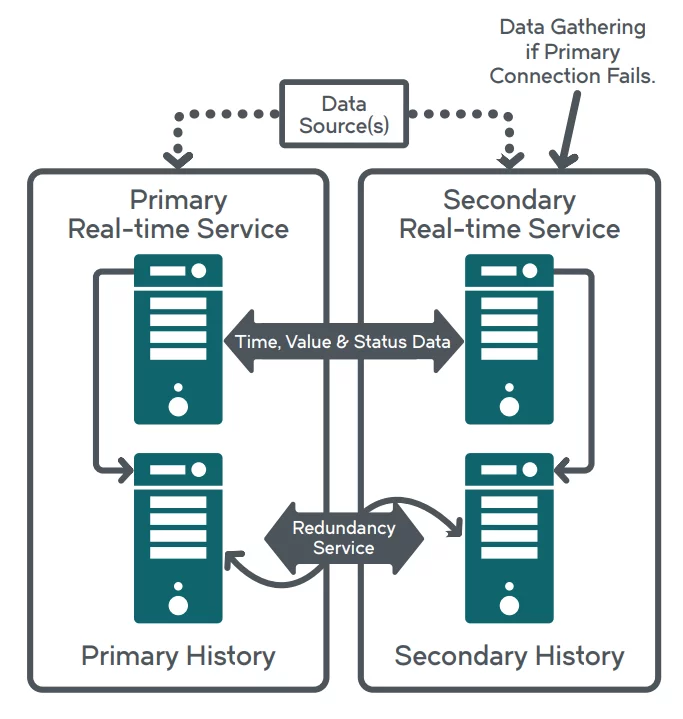

The technologies for this undertaking were selected from the Aveva APM product suite, including eDNA Historian and Intelligence. On 21 October 2019, after two data centres had been stood up, 75 terabytes of historical source data had been managed, and the fully redundant infrastructure and server software applications had been tested, the 500,000-point Enterprise Historian system went live and began actively collecting real-time data.

The configured data sources currently include CitectSCADA (multiple versions), ClearSCADA, Mosaic, Radtel, LIMS, Elpro and BOM. The first data source (data collector) began storing real-time data from a regional SCADA system in December 2018. Other sites were then added systematically, the secondary data centre was activated, and system redundancy was tested to make the eDNA Enterprise Historian system a highly available Big Data repository.

When the system went live, Parasyn was part way through training over 200 power users on how best to use the process historian Client Tools and Web interface. After formal training and hands-on practice, the power users in turn become the champions of the system, ensuring there are plenty of process experts available to help the organisation maximise its use of real-time and historical data: data which highlights how its assets perform during normal operation and during unplanned events.

Surprised Users

So, what were the real wins for QUU?

We considered feedback and made observations about what surprised the stakeholders, users and trainees. Here is a summary of what we heard:

- Do you mean I can get data about assets in both networks and plant in the one place?

- Is it that easy for me to export data?

- Can I really replay what happened in the system even though the data is coming from different unrelated systems?

- Is this data updating in real time? I thought this was just a database!

Technical Challenges

In more technical terms, the enterprise historian system with all its components provides a number of important benefits, some of which are even more important for a large organisation like QUU and essential for one that is an amalgamation of other businesses. The amalgamation meant QUU inherited other organisations’ standards, conventions, assets, asset metadata and related data systems, as is! Inheriting other systems and creating a unified user interface is challenging because starting from scratch is never feasible.

For large organisations, the user base, stakeholder group and business processes are wide and varied. For small organisations it may be simple to engage with the smaller user base and provide training and support. It may also be simple to update Standard Operating Procedures (SOPs), particularly when the SOP library is tiny. In contrast, QUU’s approach to change management was BIG, including technical, executive and business user groups. Change management, a fundamental element of all digital transformation projects (and some would argue the most important aspect), was key to a successful outcome for the Enterprise Historian implementation at QUU. This was evident whenever new requirements were tabled for discussion or risks surfaced as a result of taking a systems engineering approach to delivery: stakeholders were consulted throughout the entire journey. This ultimately led to an outcome where the stakeholders own what is provided, rather than the project team being “advocates” or “defenders” of the new tech.

Technical Benefits

The new Big System has many features. Some are highlighted below to demonstrate what happens when enterprise requirements are successfully gathered and then implemented in a single system to deliver coordinated outcomes:

- A single centralised data store that spans all infrastructure (networks and plant). This means simplified data access, administration and analytics, enhanced report writing, and a platform for machine learning capability and probably other technologies not yet considered.

- Uniform data point configuration using a data structure or hierarchy that makes sense to the entire QUU group, not only to a third-party contractor or any one stakeholder group that creates its own metadata for the occasion. A uniform data point configuration means users can access reports and other tools and find information in an organised way, rather than relying on personal, plant-specific learned knowledge. Standards or the hierarchy can be used to search, locate and consume data. No data specialists are required. You don’t need to be an operator to get started.

- Native interfaces for ClearSCADA and CitectSCADA, the chosen platforms for QUU Network SCADA and Plant HMI.

- Tools for the backloading and maintenance of historical data from other data sources (legacy systems). This means years of Old System Data can be brought into the new system, referenced at any time and correlated with other events, e.g. weather, operational controls, planning and regulatory changes.
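
To make the uniform data point configuration in the list above more concrete, here is a minimal sketch, assuming a hypothetical dot-separated hierarchy of the form `REGION.SITE.ASSET.MEASUREMENT`. The point names, the source system assigned to each point and the `find_points` helper are illustrative assumptions only; they are not QUU’s actual standard and not the eDNA API. The sketch shows how a shared hierarchy lets a user locate points by pattern, regardless of which source system collects them.

```python
from fnmatch import fnmatchcase

# Hypothetical point registry: point name -> source system.
# Names follow an assumed REGION.SITE.ASSET.MEASUREMENT hierarchy.
POINTS = {
    "NORTH.PS01.PUMP1.FLOW": "ClearSCADA",
    "NORTH.PS01.PUMP1.RUN_STATUS": "ClearSCADA",
    "NORTH.WTP02.CLARIFIER1.TURBIDITY": "CitectSCADA",
    "SOUTH.PS07.PUMP2.FLOW": "ClearSCADA",
    "SOUTH.WTP05.FILTER3.TURBIDITY": "LIMS",
}


def find_points(pattern, points=POINTS):
    """Return sorted point names matching a shell-style wildcard pattern."""
    return sorted(name for name in points if fnmatchcase(name, pattern))


# All flow measurements, across regions and source systems.
flow_points = find_points("*.FLOW")

# Everything recorded for one site.
north_ps01 = find_points("NORTH.PS01.*")
```

Because every point follows the same structure, a search such as `find_points("*.TURBIDITY")` returns points from different source systems without the user needing to know where each one originates.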

Why is an enterprise class historian required for QUU?

Putting sheer size to one side and keeping this simple, the options from the bottom up in order of performance are relational databases, plant process historians, relational databases in the cloud (often clustered) and then on-premise historians. Some come at a significant cost in terms of payload or transaction charges. There are only a couple of choices that won’t send you either broke or to the asylum with frustration while you wait for basic data about what happened last year!

In terms of system architecture, the eDNA solution provides speed, history storage redundancy, support for data source redundancy, application server redundancy (calculations, events, etc.), and a distributed architecture with buffered field data collectors, including secure transport and storage. The technology and architecture are flexible and have been tailored for the existing QUU source technologies (old, very old and new), and plans are in place to adjust them as SCADA systems are upgraded, added to or replaced in the near future. The eDNA solution also provides economical, highly compressed data storage that is efficient in terms of data retrieval.
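
The idea behind a buffered field data collector can be sketched as a simple store-and-forward queue. This is a minimal illustration under stated assumptions, not a description of how eDNA implements it: the `BufferedCollector` and `FakeLink` classes, the sample format and the point name are all hypothetical, and secure transport is left out entirely. The sketch shows samples being held locally while the link to the historian is down and then delivered in order once it returns.

```python
from collections import deque


class BufferedCollector:
    """Store-and-forward sketch: queue samples locally, forward when possible."""

    def __init__(self, send, max_buffer=10_000):
        self._send = send  # callable(sample) -> True on successful delivery
        # Bounded buffer: when full, the oldest samples are discarded first.
        self._buffer = deque(maxlen=max_buffer)

    def collect(self, timestamp, point, value):
        """Buffer a new sample, then try to forward everything pending."""
        self._buffer.append((timestamp, point, value))
        self.flush()

    def flush(self):
        """Forward buffered samples oldest-first; stop at the first failure."""
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # link down: keep the sample for the next attempt
            self._buffer.popleft()

    @property
    def pending(self):
        """Number of samples still waiting to be delivered."""
        return len(self._buffer)


class FakeLink:
    """Hypothetical stand-in for the historian connection."""

    def __init__(self):
        self.up = True
        self.received = []

    def send(self, sample):
        if self.up:
            self.received.append(sample)
        return self.up


link = FakeLink()
collector = BufferedCollector(link.send)

link.up = False                           # simulate a network outage
collector.collect(1, "PS01.FLOW", 12.5)   # held in the local buffer
collector.collect(2, "PS01.FLOW", 12.7)   # held in the local buffer

link.up = True                            # link restored
collector.collect(3, "PS01.FLOW", 12.6)   # backlog is delivered in order
```

The design choice worth noting is that a sample is removed from the buffer only after a successful send, so an outage delays data rather than losing it, up to the assumed buffer limit.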

What next for liberated Big Data?

For a generation, the challenge has been to capture enough data, store it and then retrieve, in a timely fashion, the information relevant to particular assets. With Digital Transformation initiatives driving process improvement, asset optimisation and workforce efficiency gains, quality data is essential for moving to decisions based on empirical evidence. The enterprise historian and intelligent data repositories are a fundamental building block for this transformation journey, but they are only as good as the data itself and how that data is organised. If the data is poor, or if it cannot be organised and interpreted with meaning, then results will be poor no matter how good the assets themselves may be.

Many Big Data platform providers and researchers are now concerned that the adoption rate of Artificial Intelligence models into production environments is less than 10%, meaning the best intentions are not leading to long-term results. The data lake itself, the technology performance and the availability of infrastructure do not produce business results; people do. Engagement, technology take-up and change management have always been the deciding factors, particularly for software solutions where the data itself is more complex than the temperature of your fridge and requires some mental effort to interpret in context. The tools are better and the data is definitely richer in information, but somehow that still needs to connect with business intent.

Projects without interested users are the mothballed big data solutions of the 2020s. What comes next is decided by how you start and who gets involved. We have the technology, but do we have the right plan and the people to carry it through?

For more about the application of technology, see: https://www.parasyn.com.au/articles/